Introduction

In the rapidly evolving world of technology, Graphics Processing Units (GPUs) have emerged as the backbone of advancements in Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning. These specialized electronic circuits were originally designed to accelerate the processing of images and videos, but their use now extends far beyond graphics to complex computations in various scientific and commercial domains.

As the demand for more computational power continues to grow, the role of GPUs has become increasingly critical. Enterprises are now faced with the challenging task of selecting the most suitable GPU to meet their specific needs, a decision that could significantly impact the efficiency and effectiveness of their operations.

This blog aims to shed light on the yet-to-be-released NVIDIA L40S, a GPU that promises groundbreaking features and performance capabilities. To provide a comprehensive understanding, we will compare the theoretical specifications and potential of the L40S with two other high-performing, extensively tested GPUs: the NVIDIA H100 and A100.

The Rise of Cloud GPUs

In today's data-driven world, the demand for computational power is at an all-time high. Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning are no longer futuristic concepts but essential technologies that drive everything from automated customer service to advanced data analytics. At the heart of these technologies lie Graphics Processing Units (GPUs), specialized hardware designed to handle the complex calculations that these advanced applications require.

The Cost Barrier

While the capabilities of modern GPUs are nothing short of revolutionary, their high costs often serve as a significant barrier to entry, particularly for small and medium-sized enterprises (SMEs). The financial burden of acquiring, maintaining, and upgrading a state-of-the-art GPU can be substantial. This cost factor often limits smaller organizations from fully leveraging the capabilities of advanced AI and ML technologies, putting them at a competitive disadvantage.

The Cloud Solution

This is where Cloud GPUs come into play, leveling the playing field by offering high-end computational power as a service. Cloud-based GPU solutions eliminate the need for a large upfront investment, offering instead a pay-as-you-go model that provides businesses with the flexibility to scale their operations according to their needs. This approach democratizes access to essential technologies, making it feasible for organizations of all sizes to undertake complex computational tasks.

The benefits of Cloud GPUs extend beyond cost savings. The cloud model inherently offers unparalleled scalability and flexibility, allowing organizations to adapt to project requirements dynamically. Whether you're a startup looking to run initial ML models or an established enterprise aiming to scale your AI-driven analytics, Cloud GPUs provide the computational muscle you need, precisely when you need it.

Future-Proofing with Cloud GPUs

E2E Cloud stands as a prime example of how cloud-based solutions can make high-end computational power accessible. The platform not only offers the tried-and-tested NVIDIA A100 and H100 GPUs but also plans to include the yet-to-be-released and promising NVIDIA L40S. This range of offerings allows businesses to choose the GPU that best fits their specific needs, all while benefiting from a cost-effective, scalable model.

As we look towards a future where AI and ML technologies are set to become even more integral to business operations, the role of GPUs will only grow in importance. Cloud GPUs offer a sustainable, scalable way for organizations to stay ahead of the curve, providing the tools needed to innovate and excel in an increasingly competitive landscape.

Spotlight on NVIDIA L40S

Introduction to L40S

The NVIDIA L40S is a highly anticipated GPU, expected to be released by the end of 2023. While its predecessor, the L40, has already made a significant impact in the market, the L40S aims to take performance and versatility to the next level. Built on the Ada Lovelace architecture, this GPU is positioned by NVIDIA as the most powerful universal GPU for data centers, targeting AI training, Large Language Models (LLMs), and multi-workload environments.

Specifications

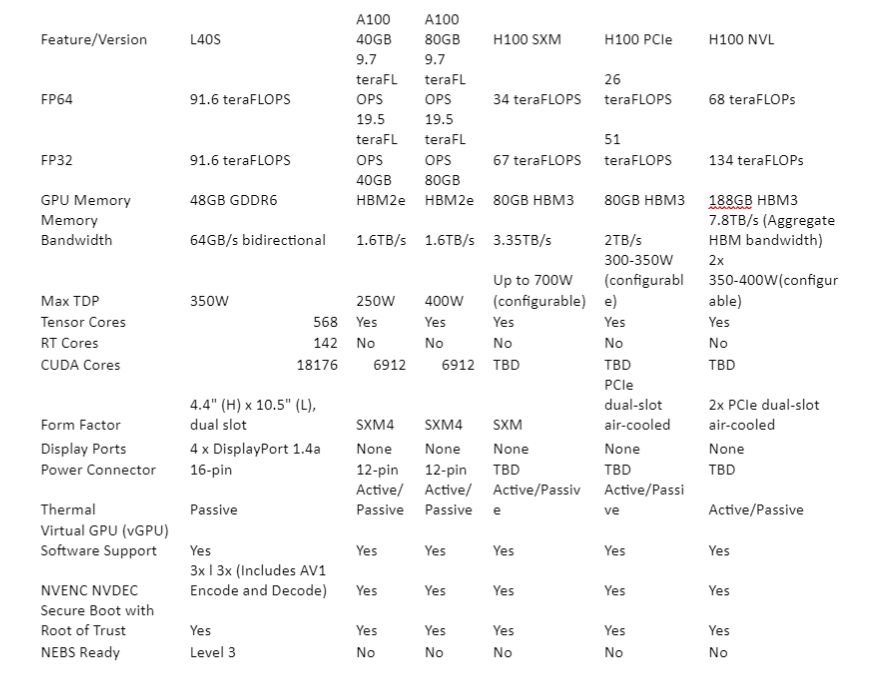

The L40S comes with an impressive set of specifications:

These specs make it a formidable competitor, even when compared theoretically to the tested A100 and H100 GPUs.

Version-Specific Features

As of now, the L40S is expected to be released in a single version. This is in contrast to the A100, which comes in 40GB and 80GB versions, and the H100, which has three different versions: H100 SXM, H100 PCIe, and H100 NVL. Each of these versions offers different performance metrics and is designed for specific use-cases, making the choice of GPU a critical decision for enterprises.

Theoretical vs Practical Performance

It's important to note that while the L40S offers promising theoretical capabilities, it has yet to be tested in real-world scenarios. Both the A100 and H100 have undergone extensive testing and have proven their reliability and performance. Therefore, while the L40S promises groundbreaking features, its practical performance remains to be seen.

Use-Cases and Industries

The L40S is designed to be a versatile GPU, capable of handling a variety of workloads. Its high computational power makes it ideal for AI and ML training, data analytics, and even advanced graphics rendering. Industries like healthcare, automotive, and financial services stand to benefit significantly from the capabilities of this GPU. One of the standout features of the L40S is its ease of implementation. Because it is a PCIe card rather than a module that needs a dedicated baseboard, it is designed for quick and straightforward integration into existing servers.

NVIDIA H100: A Quick Overview

Introduction to H100

The NVIDIA H100 Tensor Core GPU is a powerhouse designed for accelerated computing, offering unprecedented performance, scalability, and security for data centers. Built on the NVIDIA Hopper architecture, the H100 is engineered to tackle exascale workloads and is particularly adept at handling Large Language Models (LLMs) and High-Performance Computing (HPC).

Versions and Specifications

The H100 comes in three distinct versions: H100 SXM, H100 PCIe, and H100 NVL. Each version is tailored for specific use-cases and offers different performance metrics. For instance, the H100 SXM is designed for maximum performance, the H100 PCIe fits standard servers with a lower power budget, and the H100 NVL pairs two PCIe cards over an NVLink bridge for large language model inference. The H100 has been extensively tested and has proven its capabilities in real-world applications. According to NVIDIA, it offers up to 30X faster inference on the largest models compared to its predecessor, the A100, and up to 4X faster training on GPT-3 175B.

Use Cases and Industries

The H100 is a versatile GPU that can be employed across a range of industries, from healthcare and automotive to financial services. Its high computational power and scalability make it ideal for data analytics, AI and ML training, and advanced graphics rendering. One of the standout features of the H100 is its Multi-Instance GPU (MIG) technology, which allows for secure partitioning of the GPU into as many as seven separate instances. This feature maximizes the utilization of each GPU and provides greater flexibility in provisioning resources, making it ideal for cloud service providers.
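To make the partitioning idea concrete, here is a minimal Python sketch of how a provider might check whether a set of workloads fits on one MIG-enabled GPU. It is a deliberate simplification: it treats the GPU as seven equal slices (matching the "as many as seven instances" limit above) and ignores the placement rules of real MIG profiles. The `Workload` class, the slice counts, and the workload names are hypothetical.

```python
from dataclasses import dataclass

MAX_MIG_SLICES = 7  # from the text: up to seven instances per GPU


@dataclass(frozen=True)
class Workload:
    name: str
    slices: int  # hypothetical: number of GPU slices this workload requests


def plan_partitions(workloads, max_slices=MAX_MIG_SLICES):
    """Greedily admit workloads, in order, while free slices remain.

    Returns (admitted, rejected). Simplified model only: real MIG
    profiles restrict which instance sizes can be combined.
    """
    admitted, rejected = [], []
    free = max_slices
    for w in workloads:
        if 1 <= w.slices <= free:
            admitted.append(w)
            free -= w.slices
        else:
            rejected.append(w)
    return admitted, rejected


if __name__ == "__main__":
    jobs = [
        Workload("inference-a", 3),
        Workload("notebook", 1),
        Workload("training", 4),
        Workload("inference-b", 2),
    ]
    ok, not_ok = plan_partitions(jobs)
    print("admitted:", [w.name for w in ok])
    print("rejected:", [w.name for w in not_ok])
```

In this example the four-slice training job is rejected because only three slices remain when it is considered, while the smaller jobs share the GPU. A real scheduler would consult the GPU's supported instance profiles rather than a plain slice count.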

NVIDIA A100

Introduction to A100

The NVIDIA A100 Tensor Core GPU has been the industry standard for data center computing, offering a balanced mix of computational power, versatility, and efficiency. Built on the NVIDIA Ampere architecture, the A100 has been the go-to choice for enterprises looking to accelerate a wide range of workloads, from AI and machine learning to data analytics.

Versions and Specifications

The A100 comes in two versions: one with 40GB of memory and another with 80GB. The 80GB version offers double the memory, making it more suitable for workloads that require larger data sets, while the 40GB version is more cost-effective for smaller-scale applications. The A100 has been extensively tested in real-world scenarios and has proven to be a reliable workhorse for data center operations. It has been particularly effective in accelerating machine learning models and has set several performance benchmarks in the industry.
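A rough way to choose between the two memory sizes is to estimate how much memory a model's weights occupy. The Python sketch below assumes weights-only sizing (parameter count times bytes per parameter) plus a hypothetical 20% headroom factor. The model sizes are illustrative, and training typically needs considerably more memory than this estimate.

```python
# Memory capacities of the two A100 versions, from the text above.
A100_MEMORY_GB = {"A100 40GB": 40, "A100 80GB": 80}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params, dtype="fp16"):
    """Approximate size of the model weights in gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def smallest_fit(num_params, dtype="fp16", headroom=1.2):
    """Return the smallest A100 version whose memory holds the weights.

    `headroom` is an assumed safety factor for activations and buffers,
    not a measured value. Returns None if neither version is large enough.
    """
    needed = weights_gb(num_params, dtype) * headroom
    for name, capacity in sorted(A100_MEMORY_GB.items(), key=lambda kv: kv[1]):
        if needed <= capacity:
            return name
    return None


if __name__ == "__main__":
    for params in (13e9, 30e9, 70e9):  # hypothetical model sizes
        print(f"{params / 1e9:.0f}B params, fp16 -> {smallest_fit(params)}")
```

Under these assumptions a 13-billion-parameter model in FP16 fits on the 40GB version, a 30-billion-parameter model needs the 80GB version, and a 70-billion-parameter model fits on neither single card without quantization or multi-GPU sharding.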

Use Cases and Industries

The A100 is versatile and finds applications in various sectors, including healthcare, automotive, and financial services. Its computational power and efficiency make it a preferred choice for running complex simulations, data analytics, and machine learning algorithms. One of the standout features of the A100 is its Multi-Instance GPU (MIG) capability, which allows for the partitioning of the GPU into as many as seven separate instances, thereby maximizing resource utilization and offering greater flexibility for cloud service providers.

NVIDIA L40S and Its Comparison with Other GPUs

The NVIDIA L40S is a powerhouse GPU built on the Ada Lovelace architecture. It delivers 91.6 teraFLOPS of FP32 performance, making it a formidable competitor for single-precision workloads. With 48GB of GDDR6 memory, 864GB/s of memory bandwidth, and a PCIe Gen4 x16 interface rated at 64GB/s bidirectional, it's designed to handle data-intensive tasks with ease. The L40S also features 568 Tensor Cores and 142 RT Cores, providing robust capabilities for AI and ray tracing applications.

Detailed Specifications Table

- Computational Power: The L40S clearly outperforms the A100 in FP32 performance (91.6 teraFLOPS versus 19.5), making it a more powerful choice for single-precision tasks. It is not built for FP64-heavy high-performance computing, however, where the A100 and H100 remain the stronger options. The H100 series, especially the H100 NVL, shows a significant leap in computational power, particularly in FP64 and Tensor Core metrics.

- Memory and Bandwidth: While the A100 offers HBM2e memory, the L40S opts for GDDR6. The H100 series goes a step further with HBM3 memory, offering the highest memory bandwidth among the three. This makes the H100 series particularly well-suited for data-intensive tasks.

- Tensor and RT Cores: The L40S is the only GPU among the three to offer RT Cores, making it a better option for real-time ray tracing. However, all three GPUs offer Tensor Cores, crucial for AI and machine learning tasks.

- Form Factor and Thermal Design: The L40S and A100 are relatively similar in form factor and thermal design, but the H100 series offers more flexibility, ranging from the SXM module for maximum performance to the PCIe-based H100 PCIe and H100 NVL cards for standard servers.

- Additional Features: All three GPUs offer virtual GPU software support and secure boot features. However, only the L40S and H100 series offer NEBS Level 3 readiness, making them more suitable for enterprise data center operations.

- Versatility: The L40S stands out for its versatility. Its balanced feature set makes it suitable for a wide range of applications, from AI and machine learning to graphics rendering and data analytics, in both small and large-scale operations.
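The qualitative points in this comparison can be encoded as a small lookup that shortlists GPUs by requirement. The Python sketch below uses only the memory types and core features stated in the list above. The `GpuProfile` class and `shortlist` helper are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuProfile:
    name: str
    memory_type: str
    has_rt_cores: bool
    has_tensor_cores: bool


# Feature values as described in the comparison above.
CATALOG = [
    GpuProfile("L40S", "GDDR6", has_rt_cores=True, has_tensor_cores=True),
    GpuProfile("A100", "HBM2e", has_rt_cores=False, has_tensor_cores=True),
    GpuProfile("H100", "HBM3", has_rt_cores=False, has_tensor_cores=True),
]


def shortlist(catalog, **required):
    """Return the names of GPUs whose attributes match every requirement."""
    return [
        gpu.name
        for gpu in catalog
        if all(getattr(gpu, key) == value for key, value in required.items())
    ]


if __name__ == "__main__":
    print("Ray tracing:", shortlist(CATALOG, has_rt_cores=True))
    print("AI (Tensor Cores):", shortlist(CATALOG, has_tensor_cores=True))
    print("HBM3 memory:", shortlist(CATALOG, memory_type="HBM3"))
```

A filter like this only narrows the field. The final choice still depends on measured performance for the specific workload, cost, and availability.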

Practical Implications: Making the Right Choice

When it comes to selecting a GPU for your organization, the choice is far from trivial. The right GPU can significantly impact the efficiency and effectiveness of your computational tasks, whether they involve AI, machine learning, or high-performance computing.

- Workload Requirements: Different GPUs excel in different areas. For instance, the NVIDIA A100 is a versatile choice for a range of applications but may not offer the specialized capabilities of the L40S or H100 series for tasks like real-time ray tracing.

- Cost vs Performance: While the L40S and H100 series offer superior performance, they also come at a higher cost. For example, accessing the H100 on E2E Cloud costs 412 rupees per hour, while the A100 costs 170 rupees per hour for the 40GB version and 220 rupees per hour for the 80GB version. Organizations must weigh these benefits against the financial implications, especially if only smaller applications are required, where the A100 could be a more cost-effective option.

- Energy Efficiency: Newer GPUs often offer better performance per watt, which can lead to long-term energy savings, but their absolute power draw is often higher too. For instance, the NVIDIA A100 has a max power consumption ranging from 250W to 400W depending on the version, the L40S consumes up to 350W, and the H100's thermal design power (TDP) can go up to 700W in its most powerful configuration. While the L40S and H100 series offer higher performance, they can also consume more power, making the A100, particularly the 40GB version, a lower-power option for tasks that do not need the extra throughput.
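The cost and energy trade-offs above come down to simple arithmetic. The Python sketch below uses the hourly rates and maximum power figures quoted in this section. The job length and the speedup factor are hypothetical inputs that would need to be measured for a real workload, and the energy figure assumes the GPU runs at its maximum rated power for the whole job.

```python
# Hourly rates in rupees on E2E Cloud, as quoted above.
HOURLY_RATE_INR = {"H100": 412, "A100 40GB": 170, "A100 80GB": 220}


def job_cost(gpu, baseline_hours, speedup=1.0):
    """Cost of a job that takes `baseline_hours` on the reference GPU.

    `speedup` is how many times faster `gpu` finishes the same job
    (a hypothetical, workload-dependent value).
    """
    return HOURLY_RATE_INR[gpu] * baseline_hours / speedup


def break_even_speedup(faster_gpu, reference_gpu):
    """Speedup at which the pricier GPU costs the same per job."""
    return HOURLY_RATE_INR[faster_gpu] / HOURLY_RATE_INR[reference_gpu]


def energy_kwh(max_power_watts, hours):
    """Upper-bound energy use, assuming constant draw at max power."""
    return max_power_watts * hours / 1000


if __name__ == "__main__":
    hours = 100  # hypothetical job length on an A100 80GB
    print("A100 80GB:", job_cost("A100 80GB", hours), "rupees")
    print("H100 at 2x speedup:", job_cost("H100", hours, speedup=2.0), "rupees")
    ratio = break_even_speedup("H100", "A100 80GB")
    print(f"H100 breaks even at {ratio:.2f}x speedup")
    print("H100 at 700W for 100 h:", energy_kwh(700, 100), "kWh")
```

With these rates, the H100 becomes the cheaper option per job once it runs the workload roughly 1.87 times faster than the A100 80GB. Below that speedup, the A100 costs less despite taking longer.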

E2E Cloud: Bridging the Gap

As the demand for high-performance computing, AI, and machine learning capabilities continues to grow, the cost and complexity of implementing and maintaining such technologies also rise. This is particularly challenging for smaller organizations that may not have the resources for a large upfront investment in hardware.

E2E Cloud offers a viable solution to this challenge by providing access to top-of-the-line GPUs like the A100 and H100 on its cloud platform. This eliminates the need for a hefty initial investment, making these powerful computing resources accessible to a broader range of organizations. Given the long waitlist for purchasing the latest GPUs, accessing them from E2E Cloud offers a distinct advantage. It allows organizations to get their hands on cutting-edge technology without the wait, enabling them to stay competitive and agile in a fast-paced market.

E2E Cloud lets organizations access these GPUs on demand, providing a practical and cost-effective way to meet varying computational needs. With the L40S expected to be available for access by the end of 2023, organizations have the opportunity to test this new GPU in a real-world environment before making a long-term commitment. By offering a flexible and affordable solution, E2E Cloud is bridging the gap between the computational needs of organizations and the resources required to meet them.

Conclusion: The Future of GPU Computing

The landscape of GPU computing is evolving at an unprecedented pace, with each new model promising groundbreaking advancements in performance, scalability, and efficiency. The NVIDIA L40S, A100, and H100 each offer unique advantages and limitations, making the choice of GPU a critical decision for organizations looking to invest in AI, machine learning, or high-performance computing.

While the A100 has been a reliable workhorse, tested and proven in various applications, and the H100 offers cutting-edge performance at a premium price, the L40S stands as a promising newcomer. Its theoretical capabilities are impressive, but it remains to be seen how it will perform in real-world applications once it becomes available on E2E Cloud by the end of 2023.
